Hi Mike,

Thanks for the feedback on NOMADS. We recently found a slowness issue affecting NOMADS when it runs out of our Boulder data center, and our teams are working on it now that NOMADS is live out of the College Park data center. It can be hard to tell whether slowness reported by only a handful of users comes from a problem in our data center, a bad network path between a customer (possibly only from a particular region of the country) and our data center, a local issue on the customer's end, or some other cause.

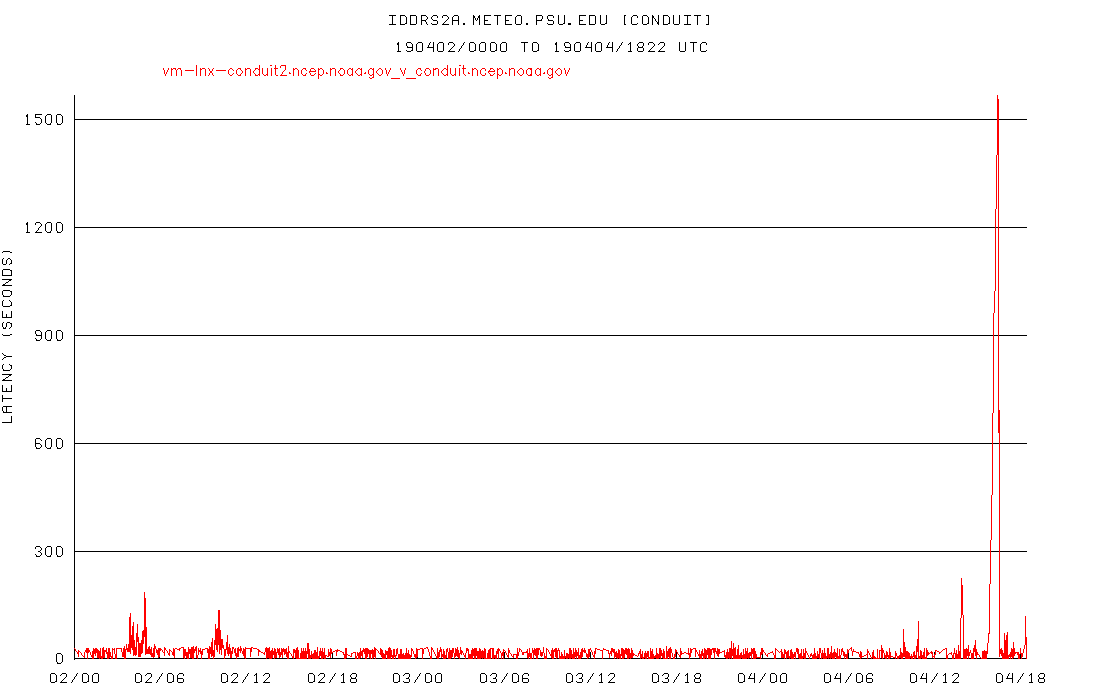

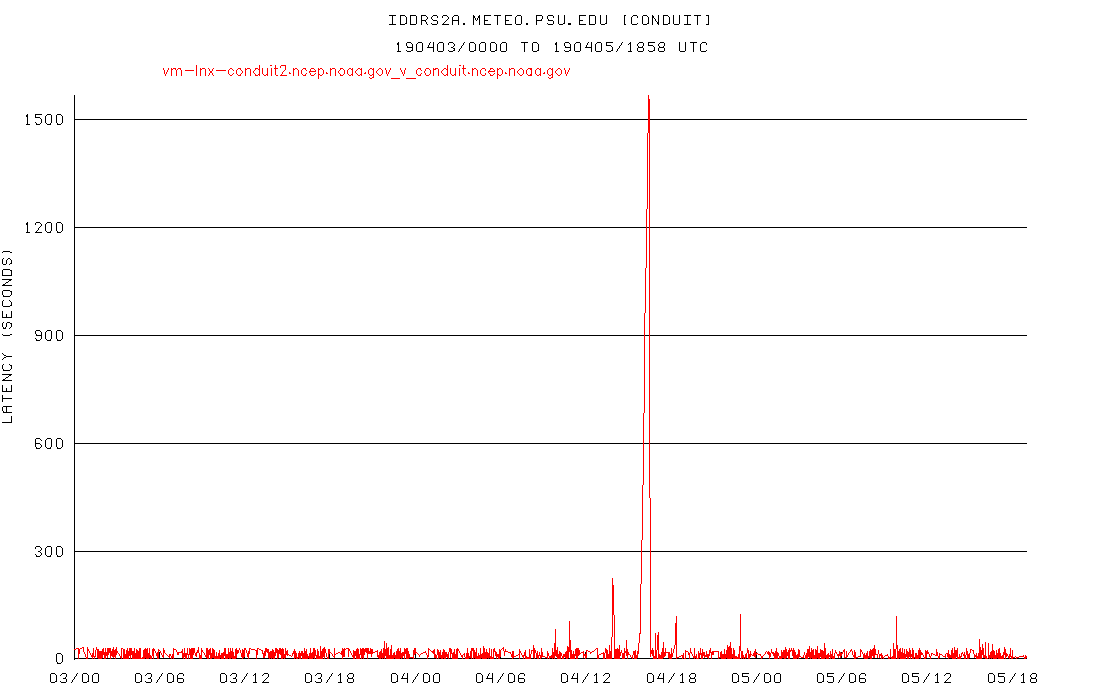

Conduit is *only* ever run from our College Park data center. Its slowness is not tied to the Boulder NOMADS issue, but it does seem to be at least somewhat tied to which of our data centers NOMADS is running out of. When NOMADS is in Boulder along with most of our other NCEP applications, the strain on the College Park data center is minimal and Conduit appears to run better as a result. When NOMADS runs in College Park (as it has since late yesterday), there is more strain on the data center and Conduit appears (based on user-provided graphs) to run a bit worse around peak model times. These are just my observations; we are still investigating what changed to cause the Conduit latencies in the first place so that we can resolve this potential constraint.

-Dustin

On Tue, Apr 9, 2019 at 4:28 PM Mike Zuranski <zuranski@xxxxxxxxxxxxxxx> wrote:

> Hi everyone,

>

> I've avoided jumping into this conversation since I don't deal much with

> Conduit these days, but Derek just mentioned something that I do have some

> applicable feedback on...

>

> > Two items happened last night. 1. NOMADS was moved back to College Park...

>

> We get nearly all of our model data via NOMADS. When it switched to

> Boulder last week we saw a significant drop in download speeds, down to a

> couple hundred KB/s or slower. Starting last night, we're back to speeds

> on the order of MB/s or tens of MB/s. Switching back to College Park seems

> to confirm for me that something about routing from Boulder was responsible.

> But again this was all on NOMADS, not sure if it's related to happenings on

> Conduit.
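To put Mike's numbers in perspective, here is a quick calculation of download time at the rates he reports. This is a minimal sketch: the 500 MB file size is a hypothetical round number, not a figure from this thread, and decimal units (1 MB = 1000 KB) are assumed.

```python
# Rough download-time comparison at the NOMADS rates described above.
# FILE_MB is a hypothetical file size chosen only for illustration.
FILE_MB = 500


def transfer_seconds(size_mb, rate_kb_per_s):
    """Seconds to move size_mb megabytes at rate_kb_per_s (decimal units)."""
    return size_mb * 1000 / rate_kb_per_s


RATES_KB_PER_S = [
    ("~200 KB/s (via Boulder)", 200),
    ("1 MB/s", 1_000),
    ("10 MB/s", 10_000),
]

for label, rate in RATES_KB_PER_S:
    secs = transfer_seconds(FILE_MB, rate)
    print(f"{label:>24}: {secs:7.0f} s ({secs / 60:5.1f} min)")
```

At about 200 KB/s the hypothetical 500 MB file takes 2500 s (roughly 42 minutes); at 10 MB/s it takes 50 s.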

>

> When I noticed this last week I sent an email to sdm@xxxxxxxx including a

> traceroute taken at the time. Let me know if you'd like me to find that and

> pass it along here or someplace else.

>

> -Mike

>

> ======================

> Mike Zuranski

> Meteorology Support Analyst

> College of DuPage - Nexlab

> Weather.cod.edu

> ======================

>

>

> On Tue, Apr 9, 2019 at 10:51 AM Person, Arthur A. <aap1@xxxxxxx> wrote:

>

>> Derek,

>>

>>

>> Do we know what change might have been made around February 10th when the

>> CONDUIT problems first started happening? Prior to that time, the CONDUIT

>> feed had been very crisp for a long period of time.

>>

>>

>> Thanks... Art

>>

>>

>>

>> Arthur A. Person

>> Assistant Research Professor, System Administrator

>> Penn State Department of Meteorology and Atmospheric Science

>> email: aap1@xxxxxxx, phone: 814-863-1563

>>

>>

>> ------------------------------

>> *From:* Derek VanPelt - NOAA Affiliate <derek.vanpelt@xxxxxxxx>

>> *Sent:* Tuesday, April 9, 2019 11:34 AM

>> *To:* Holly Uhlenhake - NOAA Federal

>> *Cc:* Carissa Klemmer - NOAA Federal; Person, Arthur A.; Pete Pokrandt;

>> _NCEP.List.pmb-dataflow; conduit@xxxxxxxxxxxxxxxx;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [conduit] Large lags on CONDUIT feed - started a week or

>> so ago

>>

>> Hi all,

>>

>> Two items happened last night.

>>

>> 1. NOMADS was moved back to College Park, which means there was a lot

>> more traffic going out, which will have an effect on the Conduit latencies. We

>> do not have a full load from the College Park servers as many of the other

>> applications are still running from Boulder, but NOMADS will certainly

>> increase overall load.

>>

>> 2. As Holly said, there were further issues delaying and changing the

>> timing of the model output yesterday afternoon/evening. I will be watching

>> from our end, and monitoring the Unidata 48 hour graph (thank you for the

>> link) throughout the day.

>>

>> Please let us know if you have questions or more information to help us

>> analyse what you are seeing.

>>

>> Thank you,

>>

>> Derek

>>

>>

>> On Tue, Apr 9, 2019 at 6:50 AM Holly Uhlenhake - NOAA Federal <

>> holly.uhlenhake@xxxxxxxx> wrote:

>>

>> Hi Pete,

>>

>> We also had an issue on the supercomputer yesterday where several models

>> going to conduit would have been stacked on top of each other instead of

>> coming out in a more spread out fashion. It's not inconceivable that

>> conduit could have backed up working through the abnormally large glut of

>> grib messages. Are things better this morning at all?

>>

>> Thanks,

>> Holly

>>

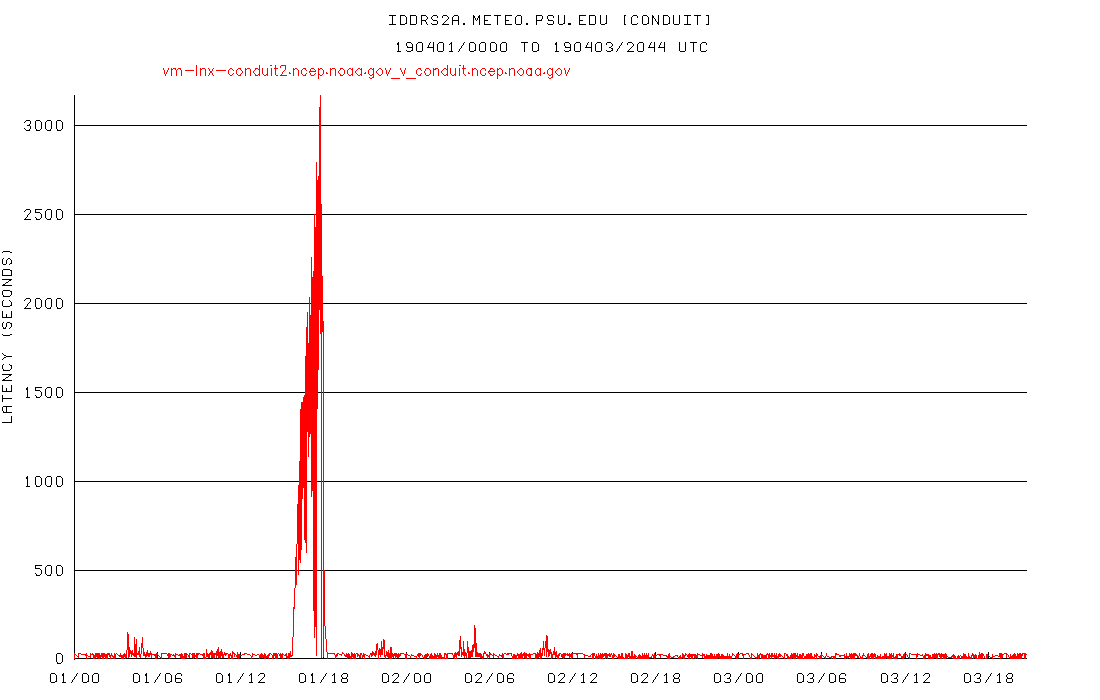

>> On Tue, Apr 9, 2019 at 12:37 AM Pete Pokrandt <poker@xxxxxxxxxxxx> wrote:

>>

>> Something changed starting with today's 18 UTC model cycle, and our lags

>> shot up to over 3600 seconds, where we started losing data. They are

>> growing again now with the 00 UTC cycle as well. PSU and Unidata CONDUIT

>> stats show similar abnormally large lags.

>>

>> FYI.

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* Person, Arthur A. <aap1@xxxxxxx>

>> *Sent:* Friday, April 5, 2019 2:10 PM

>> *To:* Carissa Klemmer - NOAA Federal

>> *Cc:* Pete Pokrandt; Derek VanPelt - NOAA Affiliate; Gilbert Sebenste;

>> conduit@xxxxxxxxxxxxxxxx; _NCEP.List.pmb-dataflow;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: Large lags on CONDUIT feed - started a week or so ago

>>

>>

>> Carissa,

>>

>>

>> The Boulder connection is definitely performing very well for CONDUIT.

>> Although there have been a couple of little blips (~ 120 seconds) since

>> yesterday, overall the performance is superb. I don't think it's quite as

>> clean as prior to the ~February 10th date when the D.C. connection went

>> bad, but it's still excellent performance. Here's our graph now with a

>> single connection (no splits):

>>

>>

>> My next question is: Will CONDUIT stay pointing at Boulder until D.C. is

>> fixed, or might you be required to switch back to D.C. at some point before

>> that?

>>

>>

>> Thanks... Art

>>

>>

>> Arthur A. Person

>> Assistant Research Professor, System Administrator

>> Penn State Department of Meteorology and Atmospheric Science

>> email: aap1@xxxxxxx, phone: 814-863-1563

>>

>>

>> ------------------------------

>> *From:* Carissa Klemmer - NOAA Federal <carissa.l.klemmer@xxxxxxxx>

>> *Sent:* Thursday, April 4, 2019 6:22 PM

>> *To:* Person, Arthur A.

>> *Cc:* Pete Pokrandt; Derek VanPelt - NOAA Affiliate; Gilbert Sebenste;

>> conduit@xxxxxxxxxxxxxxxx; _NCEP.List.pmb-dataflow;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: Large lags on CONDUIT feed - started a week or so ago

>>

>> Catching up here.

>>

>> Derek,

>> Do we have traceroutes from all users? Does anything in VCenter show any

>> system resource constraints?

>>

>> On Thursday, April 4, 2019, Person, Arthur A. <aap1@xxxxxxx> wrote:

>>

>> Yeah, definitely looks "blippier" starting around 7Z this morning, but

>> nothing like it was before. And all last night was clean. Here's our

>> graph with a 2-way split, a huge improvement over what it was before the

>> switch to Boulder:

>>

>>

>>

>> Agree with Pete that this morning's data probably isn't a good test since

>> there were other factors. Since this seems so much better, I'm going to

>> try switching to no split as an experiment and see how it holds up.

>>

>>

>> Art

>>

>>

>> Arthur A. Person

>> Assistant Research Professor, System Administrator

>> Penn State Department of Meteorology and Atmospheric Science

>> email: aap1@xxxxxxx, phone: 814-863-1563

>>

>>

>> ------------------------------

>> *From:* Pete Pokrandt <poker@xxxxxxxxxxxx>

>> *Sent:* Thursday, April 4, 2019 1:51 PM

>> *To:* Derek VanPelt - NOAA Affiliate

>> *Cc:* Person, Arthur A.; Gilbert Sebenste; Anne Myckow - NOAA Affiliate;

>> conduit@xxxxxxxxxxxxxxxx; _NCEP.List.pmb-dataflow;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [Ncep.list.pmb-dataflow] [conduit] Large lags on CONDUIT

>> feed - started a week or so ago

>>

>> Ah, so perhaps not a good test. I'll set it back to a 5-way split and

>> see how it looks tomorrow.

>>

>> Thanks for the info,

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* Derek VanPelt - NOAA Affiliate <derek.vanpelt@xxxxxxxx>

>> *Sent:* Thursday, April 4, 2019 12:38 PM

>> *To:* Pete Pokrandt

>> *Cc:* Person, Arthur A.; Gilbert Sebenste; Anne Myckow - NOAA Affiliate;

>> conduit@xxxxxxxxxxxxxxxx; _NCEP.List.pmb-dataflow;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [Ncep.list.pmb-dataflow] [conduit] Large lags on CONDUIT

>> feed - started a week or so ago

>>

>> Hi Pete -- we did have a separate issue hit the CONDUIT feed today. We

>> should be recovering now, but the backlog was sizeable. If these numbers

>> are not back to the baseline in the next hour or so please let us know. We

>> are also watching our queues and they are decreasing, but not as quickly as

>> we had hoped.

>>

>> Thank you,

>>

>> Derek

>>

>> On Thu, Apr 4, 2019 at 1:26 PM 'Pete Pokrandt' via _NCEP

>> list.pmb-dataflow <ncep.list.pmb-dataflow@xxxxxxxx> wrote:

>>

>> FYI - there is still a much larger lag for the 12 UTC run with a 5-way

>> split compared to a 10-way split. It's better since everything else failed

>> over to Boulder, but I'd venture to guess that's not the root of the

>> problem.

>>

>>

>>

>> Prior to whatever is going on to cause this, I don't recall ever seeing

>> lags this large with a 5-way split. It looked much more like the left hand

>> side of this graph, with small increases in lag with each 6 hourly model

>> run cycle, but more like 100 seconds vs the ~900 that I got this morning.

>>

>> FYI I am going to change back to a 10 way split for now.

>>

>> Pete

>>

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* conduit-bounces@xxxxxxxxxxxxxxxx <

>> conduit-bounces@xxxxxxxxxxxxxxxx> on behalf of Pete Pokrandt <

>> poker@xxxxxxxxxxxx>

>> *Sent:* Wednesday, April 3, 2019 4:57 PM

>> *To:* Person, Arthur A.; Gilbert Sebenste; Anne Myckow - NOAA Affiliate

>> *Cc:* conduit@xxxxxxxxxxxxxxxx; _NCEP.List.pmb-dataflow;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [conduit] [Ncep.list.pmb-dataflow] Large lags on CONDUIT

>> feed - started a week or so ago

>>

>> Sorry, was out this morning and just had a chance to look into this. I

>> concur with Art and Gilbert that things appear to have gotten better

>> starting with the failover of everything else to Boulder yesterday. I will

>> also reconfigure to go back to a 5-way split (as opposed to the 10-way

>> split that I've been using since this issue began) and keep an eye on

>> tomorrow's 12 UTC model run cycle - if the lags go up, it usually happens

>> worst during that cycle, shortly before 18 UTC each day.

>>

>> I'll report back tomorrow how it looks, or you can see at

>>

>>

>> http://rtstats.unidata.ucar.edu/cgi-bin/rtstats/iddstats_nc?CONDUIT+idd.aos.wisc.edu


>>

>> Thanks,

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* conduit-bounces@xxxxxxxxxxxxxxxx <

>> conduit-bounces@xxxxxxxxxxxxxxxx> on behalf of Person, Arthur A. <

>> aap1@xxxxxxx>

>> *Sent:* Wednesday, April 3, 2019 4:04 PM

>> *To:* Gilbert Sebenste; Anne Myckow - NOAA Affiliate

>> *Cc:* conduit@xxxxxxxxxxxxxxxx; _NCEP.List.pmb-dataflow;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [conduit] [Ncep.list.pmb-dataflow] Large lags on CONDUIT

>> feed - started a week or so ago

>>

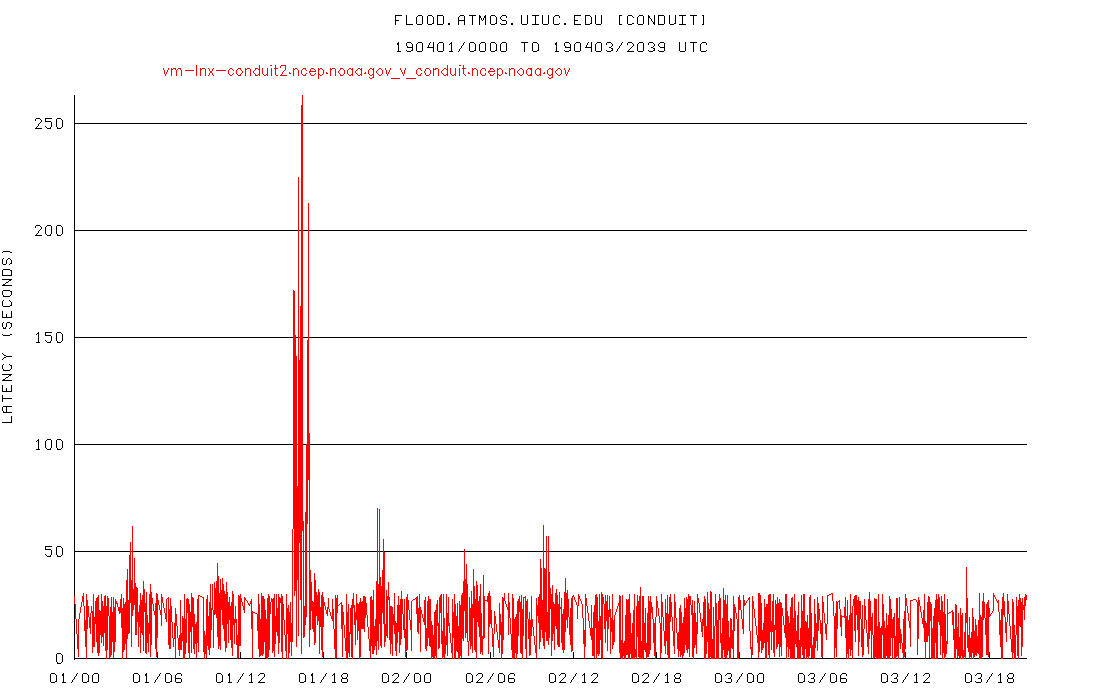

>>

>> Anne,

>>

>>

>> I'll hop back in the loop here... for some reason these replies started

>> going into my junk file (bleh). Anyway, I agree with Gilbert's

>> assessment. Things turned real clean around 12Z yesterday, looking at the

>> graphs. I usually look at flood.atmos.uiuc.edu

>> when there are problems, as their connection always seems to be the

>> cleanest. If there are even small blips or ups and downs in their

>> latencies, that usually means there's a network aberration somewhere that

>> tends to amplify into hundreds or thousands of seconds at our site and

>> elsewhere. Looking at their graph now, you can see the blipiness up until

>> 12Z yesterday, and then it's flat (except for the one spike around 16Z

>> today which I would ignore):

>>

>>

>>

>> Our direct-connected site, which is using a 10-way split right now, also

>> shows a return to calmness in the latencies:

>>

>>

>> Prior to the recent latency jump, I did not use split requests and the

>> reception had been stellar for quite some time. It's my suspicion that

>> this is a networking congestion issue somewhere close to the source since

>> it seems to affect all downstream sites. For that reason, I don't think

>> solving this problem should necessarily involve upgrading your server

>> software, but rather identifying what's jamming up the network near D.C.,

>> and testing this by switching to Boulder was an excellent idea. I will now

>> try switching our system to a two-way split to see if this performance

>> holds up with fewer pipes. Thanks for your help and I'll let you know

>> what I find out.

>>

>>

>> Art

>>

>>

>> Arthur A. Person

>> Assistant Research Professor, System Administrator

>> Penn State Department of Meteorology and Atmospheric Science

>> email: aap1@xxxxxxx, phone: 814-863-1563

>>

>>

>> ------------------------------

>> *From:* conduit-bounces@xxxxxxxxxxxxxxxx <

>> conduit-bounces@xxxxxxxxxxxxxxxx> on behalf of Gilbert Sebenste <

>> gilbert@xxxxxxxxxxxxxxxx>

>> *Sent:* Wednesday, April 3, 2019 4:07 PM

>> *To:* Anne Myckow - NOAA Affiliate

>> *Cc:* conduit@xxxxxxxxxxxxxxxx; _NCEP.List.pmb-dataflow;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [conduit] [Ncep.list.pmb-dataflow] Large lags on CONDUIT

>> feed - started a week or so ago

>>

>> Hello Anne,

>>

>> I'll jump in here as well. Consider the CONDUIT delays at UNIDATA:

>>

>>

>> http://rtstats.unidata.ucar.edu/cgi-bin/rtstats/iddstats_nc?CONDUIT+conduit.unidata.ucar.edu


>>

>>

>> And now, Wisconsin:

>>

>>

>> http://rtstats.unidata.ucar.edu/cgi-bin/rtstats/iddstats_nc?CONDUIT+idd.aos.wisc.edu


>>

>>

>> And finally, the University of Washington:

>>

>>

>> http://rtstats.unidata.ucar.edu/cgi-bin/rtstats/iddstats_nc?CONDUIT+freshair1.atmos.washington.edu


>>

>>

>> All three have direct feeds from you. Flipping over to Boulder

>> definitely caused a major improvement. There was still a brief spike in

>> delay, but much shorter and minimal

>> compared to what it was.

>>

>> Gilbert

>>

>> On Wed, Apr 3, 2019 at 10:03 AM Anne Myckow - NOAA Affiliate <

>> anne.myckow@xxxxxxxx> wrote:

>>

>> Hi Pete,

>>

>> As of yesterday we failed almost all of our applications to our site in

>> Boulder (meaning away from CONDUIT). Have you noticed an improvement in

>> your speeds since yesterday afternoon? If so this will give us a clue that

>> maybe there's something interfering on our side that isn't specifically

>> CONDUIT, but another app that might be causing congestion. (And if it's the

>> same then that's a clue in the other direction.)
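Anne's before/after test can be made concrete by summarizing latency samples on either side of the failover time. This is a minimal sketch, assuming latency samples are available as (UTC timestamp, seconds) pairs, for example transcribed from the rtstats graphs; `compare_cutover` is a hypothetical helper, and the cutover time below is approximate, taken from Art's note that things turned clean around 12Z on April 2. Because lags peak with particular model cycles (worst around the 12 UTC suite) and were reported to be worse during the work week, a fair comparison should use matching cycles and days on both sides.

```python
from datetime import datetime, timezone
from statistics import median

# Approximate failover time (assumption based on Art's observation).
CUTOVER = datetime(2019, 4, 2, 12, tzinfo=timezone.utc)


def _summary(latencies):
    # Count, median, and peak latency; an empty side reports only its count.
    if not latencies:
        return {"n": 0}
    return {"n": len(latencies), "median": median(latencies), "max": max(latencies)}


def compare_cutover(samples, cutover=CUTOVER):
    """Split (timestamp, latency_s) samples at `cutover` and summarize each side."""
    before = [lat for t, lat in samples if t < cutover]
    after = [lat for t, lat in samples if t >= cutover]
    return _summary(before), _summary(after)
```

A clear drop in both median and peak latency after the cutover points toward congestion from the other applications; no change points the other way, as Anne describes.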

>>

>> Thanks,

>> Anne

>>

>> On Mon, Apr 1, 2019 at 3:24 PM Pete Pokrandt <poker@xxxxxxxxxxxx> wrote:

>>

>> The lag here at UW-Madison was up to 1200 seconds today, and that's with

>> a 10-way split feed. Whatever is causing the issue has definitely not been

>> resolved, and historically is worse during the work week than on the

>> weekends. If that helps at all.

>>

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* Anne Myckow - NOAA Affiliate <anne.myckow@xxxxxxxx>

>> *Sent:* Thursday, March 28, 2019 4:28 PM

>> *To:* Person, Arthur A.

>> *Cc:* Carissa Klemmer - NOAA Federal; Pete Pokrandt;

>> _NCEP.List.pmb-dataflow; conduit@xxxxxxxxxxxxxxxx;

>> support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [Ncep.list.pmb-dataflow] Large lags on CONDUIT feed -

>> started a week or so ago

>>

>> Hello Art,

>>

>> We will not be upgrading to version 6.13 on these systems as they are not

>> robust enough to support the local logging inherent in the new version.

>>

>> I will check in with my team on whether there are any further actions we can

>> take to try and troubleshoot this issue, but I fear we may be at the limit

>> of our ability to make this better.

>>

>> I’ll let you know tomorrow where we stand. Thanks.

>> Anne

>>

>> On Mon, Mar 25, 2019 at 3:00 PM Person, Arthur A. <aap1@xxxxxxx> wrote:

>>

>> Carissa,

>>

>>

>> Can you report any status on this inquiry?

>>

>>

>> Thanks... Art

>>

>>

>> Arthur A. Person

>> Assistant Research Professor, System Administrator

>> Penn State Department of Meteorology and Atmospheric Science

>> email: aap1@xxxxxxx, phone: 814-863-1563

>>

>>

>> ------------------------------

>> *From:* Carissa Klemmer - NOAA Federal <carissa.l.klemmer@xxxxxxxx>

>> *Sent:* Tuesday, March 12, 2019 8:30 AM

>> *To:* Pete Pokrandt

>> *Cc:* Person, Arthur A.; conduit@xxxxxxxxxxxxxxxx;

>> support-conduit@xxxxxxxxxxxxxxxx; _NCEP.List.pmb-dataflow

>> *Subject:* Re: Large lags on CONDUIT feed - started a week or so ago

>>

>> Hi Everyone

>>

>> I’ve added the Dataflow team email to the thread. I haven’t heard that

>> any changes were made or that any issues were found. But the team can look

>> today and see if we have any signifiers of overall slowness with anything.

>>

>> Dataflow, try taking a look at the new Citrix or VM troubleshooting tools

>> for any abnormal signatures that may explain this.

>>

>> On Monday, March 11, 2019, Pete Pokrandt <poker@xxxxxxxxxxxx> wrote:

>>

>> Art,

>>

>> I don't know if NCEP ever figured anything out, but I've been able to

>> keep my latencies reasonable (300-600s max, mostly during the 12 UTC model

>> suite) by splitting my CONDUIT request 10 ways, instead of the 5 that I had

>> been doing, or in a single request. Maybe give that a try and see if it

>> helps at all.
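Pete's workaround splits the single CONDUIT request into several parallel REQUEST lines in the LDM's ldmd.conf. Below is a minimal Python sketch that generates such lines. It assumes, as Unidata's CONDUIT setup notes describe, that each CONDUIT product identifier ends in a sequence number, so the feed can be partitioned on its last digit. `split_requests` and `pattern_of` are hypothetical helpers, and grouping digits by d mod n is just one valid partition, not necessarily the one any particular site uses.

```python
import re


def split_requests(n, feed="CONDUIT", upstream="conduit.ncep.noaa.gov"):
    """Hypothetical helper: ldmd.conf REQUEST lines for an n-way split."""
    # Only split counts that divide 10 spread the digits 0-9 evenly.
    if n not in (1, 2, 5, 10):
        raise ValueError("n must be 1, 2, 5, or 10")
    if n == 1:
        return [f'REQUEST {feed} ".*" {upstream}']
    lines = []
    for i in range(n):
        # Digit d goes to request i when d % n == i, e.g. [05], [16], ... for n = 5.
        digits = "".join(str(d) for d in range(10) if d % n == i)
        # "$" anchors the match to the final character of the product ID.
        lines.append(f'REQUEST {feed} "[{digits}]$" {upstream}')
    return lines


def pattern_of(line):
    # Pull the quoted regular-expression pattern back out of a REQUEST line.
    return re.search(r'"([^"]*)"', line).group(1)


if __name__ == "__main__":
    for line in split_requests(5):
        print(line)
```

With `n=10` this produces one request per final digit, the kind of 10-way split Pete describes; with `n=5` each request covers two digits.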

>>

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* Person, Arthur A. <aap1@xxxxxxx>

>> *Sent:* Monday, March 11, 2019 3:45 PM

>> *To:* Holly Uhlenhake - NOAA Federal; Pete Pokrandt

>> *Cc:* conduit@xxxxxxxxxxxxxxxx; support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [conduit] Large lags on CONDUIT feed - started a week or

>> so ago

>>

>>

>> Holly,

>>

>>

>> Was there any resolution to this on the NCEP end? I'm still seeing

>> terrible delays (1000-4000 seconds) receiving data from

>> conduit.ncep.noaa.gov.

>> It would be helpful to know if things are resolved at NCEP's end so I know

>> whether to look further down the line.

>>

>>

>> Thanks... Art

>>

>>

>> Arthur A. Person

>> Assistant Research Professor, System Administrator

>> Penn State Department of Meteorology and Atmospheric Science

>> email: aap1@xxxxxxx, phone: 814-863-1563

>>

>>

>> ------------------------------

>> *From:* conduit-bounces@xxxxxxxxxxxxxxxx <

>> conduit-bounces@xxxxxxxxxxxxxxxx> on behalf of Holly Uhlenhake - NOAA

>> Federal <holly.uhlenhake@xxxxxxxx>

>> *Sent:* Thursday, February 21, 2019 12:05 PM

>> *To:* Pete Pokrandt

>> *Cc:* conduit@xxxxxxxxxxxxxxxx; support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [conduit] Large lags on CONDUIT feed - started a week or

>> so ago

>>

>> Hi Pete,

>>

>> We'll take a look and see if we can figure out what might be going on.

>> We haven't done anything to try and address this yet, but based on your

>> analysis I'm suspicious that it might be tied to a resource constraint on

>> the VM or the blade it resides on.

>>

>> Thanks,

>> Holly Uhlenhake

>> Acting Dataflow Team Lead

>>

>> On Thu, Feb 21, 2019 at 11:32 AM Pete Pokrandt <poker@xxxxxxxxxxxx>

>> wrote:

>>

>> Just FYI, data is flowing, but the large lags continue.

>>

>>

>> http://rtstats.unidata.ucar.edu/cgi-bin/rtstats/iddstats_nc?CONDUIT+idd.aos.wisc.edu


>>

>> http://rtstats.unidata.ucar.edu/cgi-bin/rtstats/iddstats_nc?CONDUIT+conduit.unidata.ucar.edu


>>

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* conduit-bounces@xxxxxxxxxxxxxxxx <

>> conduit-bounces@xxxxxxxxxxxxxxxx> on behalf of Pete Pokrandt <

>> poker@xxxxxxxxxxxx>

>> *Sent:* Wednesday, February 20, 2019 12:07 PM

>> *To:* Carissa Klemmer - NOAA Federal

>> *Cc:* conduit@xxxxxxxxxxxxxxxx; support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [conduit] Large lags on CONDUIT feed - started a week or

>> so ago

>>

>> Data is flowing again - picked up somewhere in the GEFS. Maybe CONDUIT

>> server was restarted, or the LDM on it? Lags are large (3000s+) but dropping

>> slowly.

>>

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* conduit-bounces@xxxxxxxxxxxxxxxx <

>> conduit-bounces@xxxxxxxxxxxxxxxx> on behalf of Pete Pokrandt <

>> poker@xxxxxxxxxxxx>

>> *Sent:* Wednesday, February 20, 2019 11:56 AM

>> *To:* Carissa Klemmer - NOAA Federal

>> *Cc:* conduit@xxxxxxxxxxxxxxxx; support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* Re: [conduit] Large lags on CONDUIT feed - started a week or

>> so ago

>>

>> Just a quick follow-up - we started falling far enough behind (3600+ sec)

>> that we are losing data. We got short files starting at 174h into the GFS

>> run, and only got (incomplete) data through 207h.

>>

>> We have now not received any data on CONDUIT since 11:27 AM CST (1727

>> UTC) today (Wed Feb 20)
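Pete's report that data started being lost once lags passed 3600 seconds suggests a hard latency cutoff. Our understanding is that 3600 s matches the LDM's default maximum latency, but check your own configuration. The sketch below shows one way a site might flag products by how close they came to that limit, assuming that threshold. The `warn_fraction` knob and the category names are hypothetical.

```python
from datetime import datetime, timezone

# Assumed cutoff: products arriving later than this are treated as lost.
MAX_LATENCY_S = 3600


def latency_seconds(created, received):
    """Seconds between product creation upstream and arrival downstream."""
    return (received - created).total_seconds()


def classify(created, received, max_latency=MAX_LATENCY_S, warn_fraction=0.5):
    """Label a product 'ok', 'at risk', or 'dropped' by its latency."""
    lat = latency_seconds(created, received)
    if lat > max_latency:
        return "dropped"
    if lat > warn_fraction * max_latency:
        return "at risk"
    return "ok"
```

Both timestamps should be timezone-aware (UTC) so the subtraction is unambiguous.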

>>

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>>

>> ------------------------------

>> *From:* conduit-bounces@xxxxxxxxxxxxxxxx <

>> conduit-bounces@xxxxxxxxxxxxxxxx> on behalf of Pete Pokrandt <

>> poker@xxxxxxxxxxxx>

>> *Sent:* Wednesday, February 20, 2019 11:28 AM

>> *To:* Carissa Klemmer - NOAA Federal

>> *Cc:* conduit@xxxxxxxxxxxxxxxx; support-conduit@xxxxxxxxxxxxxxxx

>> *Subject:* [conduit] Large lags on CONDUIT feed - started a week or so

>> ago

>>

>> Carissa,

>>

>> We have been feeding CONDUIT using a 5 way split feed direct from

>> conduit.ncep.noaa.gov,

>> and it had been really good for some time, lags 30-60 seconds or less.

>>

>> However, the past week or so, we've been seeing some very large lags

>> during each 6 hour model suite - Unidata is also seeing these - they are

>> also feeding direct from conduit.ncep.noaa.gov.

>>

>>

>> http://rtstats.unidata.ucar.edu/cgi-bin/rtstats/iddstats_nc?CONDUIT+idd.aos.wisc.edu


>>

>>

>> http://rtstats.unidata.ucar.edu/cgi-bin/rtstats/iddstats_nc?CONDUIT+conduit.unidata.ucar.edu


>>

>>

>> Any idea what's going on, or how we can find out?

>>

>> Thanks!

>> Pete

>>

>>

>>


>> --

>> Pete Pokrandt - Systems Programmer

>> UW-Madison Dept of Atmospheric and Oceanic Sciences

>> 608-262-3086 - poker@xxxxxxxxxxxx

>> _______________________________________________

>> NOTE: All exchanges posted to Unidata maintained email lists are

>> recorded in the Unidata inquiry tracking system and made publicly

>> available through the web. Users who post to any of the lists we

>> maintain are reminded to remove any personal information that they

>> do not want to be made public.

>>

>>

>> conduit mailing list

>> conduit@xxxxxxxxxxxxxxxx

>> For list information or to unsubscribe, visit:

>> http://www.unidata.ucar.edu/mailing_lists/

>>

>> --

>> Carissa Klemmer

>> NCEP Central Operations

>> IDSB Branch Chief

>> 301-683-3835

>>

>> _______________________________________________

>> Ncep.list.pmb-dataflow mailing list

>> Ncep.list.pmb-dataflow@xxxxxxxxxxxxxxxxxxxx

>> https://www.lstsrv.ncep.noaa.gov/mailman/listinfo/ncep.list.pmb-dataflow

>>

>> --

>> Anne Myckow

>> Lead Dataflow Analyst

>> NOAA/NCEP/NCO

>> 301-683-3825

>>

>> --

>> Anne Myckow

>> Lead Dataflow Analyst

>> NOAA/NCEP/NCO

>> 301-683-3825

>>

>> --

>> ----

>>

>> Gilbert Sebenste

>> Consulting Meteorologist

>> AllisonHouse, LLC

>>

>> --

>> Derek Van Pelt

>> DataFlow Analyst

>> NOAA/NCEP/NCO

>>

>> --

>> Carissa Klemmer

>> NCEP Central Operations

>> IDSB Branch Chief

>> 301-683-3835

>>

>> --

>> Derek Van Pelt

>> DataFlow Analyst

>> NOAA/NCEP/NCO

>> --

>> Misspelled straight from Derek's phone.

>

--

Dustin Sheffler

NCEP Central Operations - Dataflow

5830 University Research Court, Rm 1030

College Park, Maryland 20740

Office: (301) 683-3827