Pete,

I'm afraid that at this time I won't be able to have anyone troubleshoot the
ldm0 Boulder conduit. In less than 2 months those boxes will be taken
offline. I actually wasn't aware that anyone was still pulling from them
instead of conduit.ncep. We will make sure to give this list a heads-up
when the date is officially set for those going offline.

Carissa Klemmer

NCEP Central Operations

Dataflow Team Lead

301-683-3835

On Fri, Feb 26, 2016 at 11:58 AM, Pete Pokrandt <poker@xxxxxxxxxxxx> wrote:

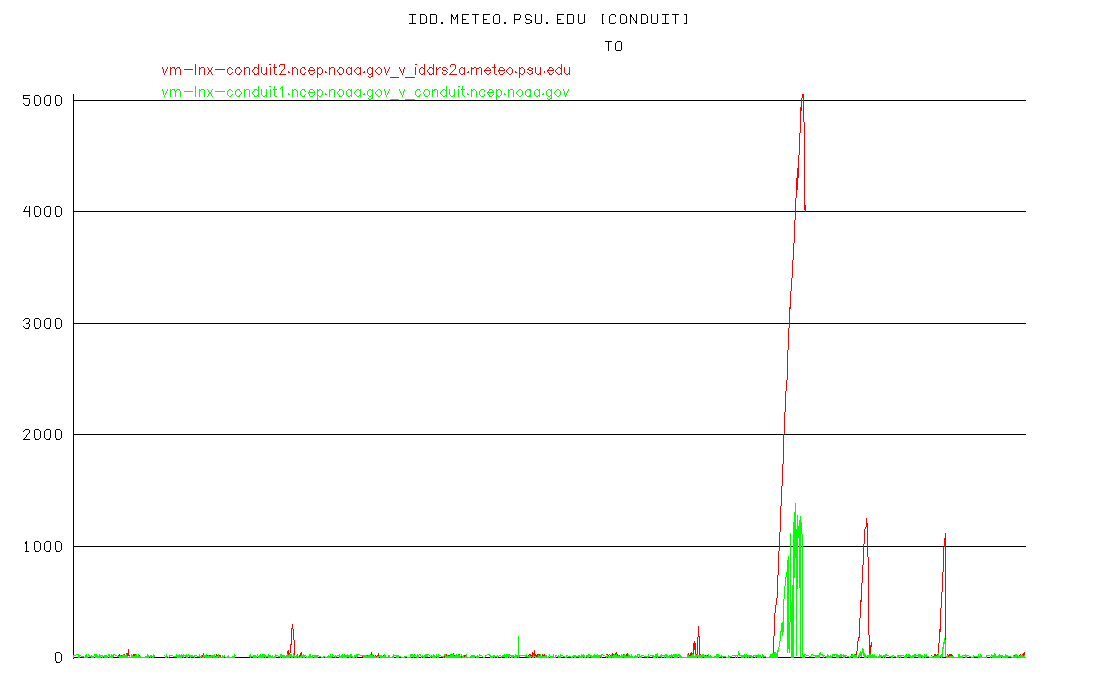

> No change for me from yesterday. That is - the traceroutes look about the

> same as they always have, but data is flowing just fine. My conduit latency

> graph is attached. Time goes from left to right, current (1753 UTC) is on

> the far right. The place where the higher red line latencies end is

> yesterday when I stopped feeding from idd.unidata.ucar.edu and

> switched back to conduit.ncep.noaa.gov (and kept ncepldm4.woc.noaa.gov on

> also)

>

>

> traceroute from idd.aos.wisc.edu to conduit.ncep.noaa.gov (140.90.101.42), 30 hops max, 60 byte packets

> 1 r-cssc-b280c-1-core-vlan-510-primary.net.wisc.edu (144.92.130.3) 1.063 ms 1.046 ms 1.054 ms

> 2 internet2-ord-600w-100G.net.wisc.edu (144.92.254.229) 18.454 ms 18.457 ms 18.430 ms

> 3 et-10-0-0.107.rtr.clev.net.internet2.edu (198.71.45.9) 28.127 ms 28.138 ms 28.218 ms

> 4 et-11-3-0-1276.clpk-core.maxgigapop.net (206.196.177.4) 37.519 ms 37.724 ms 37.684 ms

> 5 noaa-i2.demarc.maxgigapop.net (206.196.177.118) 38.073 ms 38.388 ms 38.183 ms

> 6 140.90.111.36 (140.90.111.36) 37.787 ms 38.047 ms 39.968 ms

> 7 140.90.76.69 (140.90.76.69) 38.319 ms 37.895 ms 37.862 ms

> 8 * * *

> 9 * * *

>

> Pete

>

>

>

> <http://www.weather.com/tv/shows/wx-geeks/video/the-incredible-shrinking-cold-pool>

> --

> Pete Pokrandt - Systems Programmer

> UW-Madison Dept of Atmospheric and Oceanic Sciences

> 608-262-3086 - poker@xxxxxxxxxxxx

>

>

>

> ------------------------------

> *From:* conduit-bounces@xxxxxxxxxxxxxxxx <conduit-bounces@xxxxxxxxxxxxxxxx>

> on behalf of Carissa Klemmer - NOAA Federal <carissa.l.klemmer@xxxxxxxx>

> *Sent:* Friday, February 26, 2016 6:42 AM

> *To:* Bob Lipschutz - NOAA Affiliate

> *Cc:* Bentley, Alicia M; support-conduit@xxxxxxxxxxxxxxxx;

> _NCEP.List.pmb-dataflow; _NOAA Boulder NOC; Daes Support

> *Subject:* Re: [conduit] [Ncep.list.pmb-dataflow] Large CONDUIT latencies

> to UW-Madison idd.aos.wisc.edu starting the last day or two.

>

> Hi All,

>

> This is unfortunate news. We have already notified the network team on

> this, but if possible can you send traceroutes please so I can provide more

> troubleshooting points.

>

> Carissa Klemmer

> NCEP Central Operations

> Dataflow Team Lead

> 301-683-3835

>

> On Thu, Feb 25, 2016 at 7:22 PM, Bob Lipschutz - NOAA Affiliate <

> robert.c.lipschutz@xxxxxxxx> wrote:

>

>> As another point on the curve, we have also been seeing high latency with

>> the NCEP MRMS LDM feed, mrms-ldmout.ncep.noaa.gov this afternoon.

>> Products were running about 30 minutes behind. Around 2330Z the feed

>> started catching up, and it seems to be current at the moment.

>>

>> -Bob

>>

>>

>> On Thu, Feb 25, 2016 at 11:11 PM, Arthur A Person <aap1@xxxxxxx> wrote:

>>

>>> Mike,

>>>

>>> Correction to what I said below... the points we tested to actually
>>> *were* within NOAA, but we weren't sure how close they were to the
>>> CONDUIT source. We got throughputs of approximately 2.5-3.5 Gbps.

>>>

>>> Art

>>>

>>> ------------------------------

>>>

>>> *From: *"Arthur A Person" <aap1@xxxxxxx>

>>> *To: *"Mike Dross" <mwdross@xxxxxxxxx>

>>> *Cc: *"Bentley, Alicia M" <ambentley@xxxxxxxxxx>, "

>>> support-conduit@xxxxxxxxxxxxxxxx" <conduit@xxxxxxxxxxxxxxxx>,

>>> "_NCEP.List.pmb-dataflow" <ncep.list.pmb-dataflow@xxxxxxxx>, "_NOAA

>>> Boulder NOC" <nb-noc@xxxxxxxx>, "Daes Support" <daessupport@xxxxxxxxxx>

>>> *Sent: *Thursday, February 25, 2016 5:58:58 PM

>>>

>>> *Subject: *Re: [conduit] Large CONDUIT latencies to UW-Madison

>>> idd.aos.wisc.edu starting the last day or two.

>>>

>>> Mike,

>>>

>>> Our network folks have done this with tests to the MAX gigaPOP and have

>>> gotten good results. We'd like to test all the way to NCEP, but as far as

>>> I know, there isn't a publicly available test point within NCEP to do this.

>>>

>>> Art

>>>

>>> ------------------------------

>>>

>>> *From: *"Mike Dross" <mwdross@xxxxxxxxx>

>>> *To: *"Bob Lipschutz - NOAA Affiliate" <robert.c.lipschutz@xxxxxxxx>

>>> *Cc: *"Arthur A Person" <aap1@xxxxxxx>, "Bentley, Alicia M" <

>>> ambentley@xxxxxxxxxx>, "support-conduit@xxxxxxxxxxxxxxxx" <

>>> conduit@xxxxxxxxxxxxxxxx>, "_NCEP.List.pmb-dataflow" <

>>> ncep.list.pmb-dataflow@xxxxxxxx>, "_NOAA Boulder NOC" <nb-noc@xxxxxxxx>,

>>> "Daes Support" <daessupport@xxxxxxxxxx>

>>> *Sent: *Thursday, February 25, 2016 5:53:26 PM

>>> *Subject: *Re: [conduit] Large CONDUIT latencies to UW-Madison

>>> idd.aos.wisc.edu starting the last day or two.

>>>

>>> I highly suggest you guys set up an iperf test to measure the actual

>>> bandwidth and latency between your sites. This can help eliminate LDM as

>>> the problem.

>>>

>>> https://iperf.fr/

>>>

>>> Very easy to set up.

>>>

>>> -Mike

>>>

>>> On Thu, Feb 25, 2016 at 5:32 PM, Bob Lipschutz - NOAA Affiliate <

>>> robert.c.lipschutz@xxxxxxxx> wrote:

>>>

>>>> FTP transfer rates to NOAA/ESRL/GSD have also fallen way off... as bad
>>>> as I've ever seen. E.g.,

>>>> curl ftp://ftpprd.ncep.noaa.gov/pub/data/nccf/com/gfs/prod/gfs.2016022312/gfs.t12z.pgrb2.0p25.f012 -o /dev/null

>>>>   % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current

>>>>                                  Dload  Upload   Total   Spent    Left  Speed

>>>>  12  214M   12 25.7M    0     0   279k      0  0:13:04  0:01:34  0:11:30  224k

>>>>

>>>> -Bob

>>>>

>>>>

>>>> On Thu, Feb 25, 2016 at 10:23 PM, Arthur A Person <aap1@xxxxxxx> wrote:

>>>>

>>>>> Folks,

>>>>>

>>>>> Looks like our feed from conduit.ncep.noaa.gov is seeing delays again

>>>>> beginning last evening with the 00Z reception of the gfs 0p25 degree data:

>>>>>

>>>>> Art

>>>>>

>>>>>

>>>>> ------------------------------

>>>>>

>>>>> *From: *"Carissa Klemmer - NOAA Federal" <carissa.l.klemmer@xxxxxxxx>

>>>>> *To: *admin@xxxxxxxx

>>>>> *Cc: *"Bentley, Alicia M" <ambentley@xxxxxxxxxx>, "Michael Schmidt" <

>>>>> mschmidt@xxxxxxxx>, conduit@xxxxxxxxxxxxxxxx,

>>>>> "_NCEP.List.pmb-dataflow" <ncep.list.pmb-dataflow@xxxxxxxx>, "Daes

>>>>> Support" <daessupport@xxxxxxxxxx>

>>>>> *Sent: *Monday, February 22, 2016 1:57:21 PM

>>>>> *Subject: *Re: [conduit] Large CONDUIT latencies to UW-Madison

>>>>> idd.aos.wisc.edu starting the last day or two.

>>>>>

>>>>> Keep providing the feedback. All of it is being given to the NOAA NOC

>>>>> admins. This seems to be a pretty widespread issue with numerous circuits

>>>>> being impacted. As soon as I have more information about resolution I will

>>>>> pass it on.

>>>>>

>>>>> Carissa Klemmer

>>>>> NCEP Central Operations

>>>>> Dataflow Team Lead

>>>>> 301-683-3835

>>>>>

>>>>> On Mon, Feb 22, 2016 at 1:32 PM, <admin@xxxxxxxx> wrote:

>>>>>

>>>>>> Those traceroutes clearly show the issue is on the last 2 hops or

>>>>>> inside NOAA.

>>>>>>

>>>>>> Ray

>>>>>>

>>>>>> On Friday, February 19, 2016 2:39pm, "Gerry Creager - NOAA Affiliate"

>>>>>> <gerry.creager@xxxxxxxx> said:

>>>>>>

>>>>>> > _______________________________________________

>>>>>> > conduit mailing list

>>>>>> > conduit@xxxxxxxxxxxxxxxx

>>>>>> > For list information or to unsubscribe, visit:

>>>>>> > http://www.unidata.ucar.edu/mailing_lists/

>>>>>> >

>>>>>> > If I were the conspiracy theory type, I might think to blame the
>>>>>> > balkanization of all paths to the internet from NOAA sites via the Trusted
>>>>>> > Internet Connection stuff. But I'm just hypothesizing. Or, they could be
>>>>>> > running distro on overloaded VMs.

>>>>>> >

>>>>>> > gerry

>>>>>> >

>>>>>> > On Fri, Feb 19, 2016 at 1:28 PM, Patrick L. Francis <

>>>>>> wxprofessor@xxxxxxxxx>

>>>>>> > wrote:

>>>>>> >

>>>>>> >>

>>>>>> >>

>>>>>> >> Art / Pete et al. :)

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> There seems to be a consistency in potential packet loss no matter
>>>>>> >> which route is taken into ncep… so whoever you are communicating with, you
>>>>>> >> might have them investigate 140.90.111.36… reference the previous graphic
>>>>>> >> shown and this new one here:

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> http://drmalachi.org/files/ncep/ec2-ncep.png

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> if you are unfamiliar with amazon ec2 routing, the first.. twenty
>>>>>> >> something or so hops are just internal to amazon, and they don’t jump
>>>>>> >> outside until you hit the internet2 hops, which then jump to gigapop, and
>>>>>> >> from there to noaa internal.. so since this amazon box is in ashburn,
>>>>>> >> physically it’s close, and has limited interruptions until that point..

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> the same hop causes more severe problems from my colo boxes, which are
>>>>>> >> hurricane electric direct, which means that in those cases jumping from
>>>>>> >> hurricane electric to 140.90.111.36 has “severe” problems (including packet
>>>>>> >> loss) while jumping from amazon to I2 to gigapop to 140.90.111.36 also
>>>>>> >> encounters issues, but not as severe..

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> hopefully this may help :) Happy Friday :)

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> cheers,

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> --patrick

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> -------------------------------------------------------

>>>>>> >>

>>>>>> >> Patrick L. Francis

>>>>>> >>

>>>>>> >> Vice President of Research & Development

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Aeris Weather

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> http://aerisweather.com/

>>>>>> >>

>>>>>> >> http://modelweather.com/

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> http://facebook.com/wxprofessor/

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> --------------------------------------------------------

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> *From:* conduit-bounces@xxxxxxxxxxxxxxxx [mailto:

>>>>>> >> conduit-bounces@xxxxxxxxxxxxxxxx] *On Behalf Of *Arthur A Person

>>>>>> >> *Sent:* Friday, February 19, 2016 1:57 PM

>>>>>> >> *To:* Pete Pokrandt <poker@xxxxxxxxxxxx>

>>>>>> >> *Cc:* Bentley, Alicia M <ambentley@xxxxxxxxxx>; Michael Schmidt <
>>>>>> >> mschmidt@xxxxxxxx>; support-conduit@xxxxxxxxxxxxxxxx <
>>>>>> >> conduit@xxxxxxxxxxxxxxxx>; _NCEP.List.pmb-dataflow <
>>>>>> >> ncep.list.pmb-dataflow@xxxxxxxx>; Daes Support <daessupport@xxxxxxxxxx>

>>>>>> >> *Subject:* Re: [conduit] Large CONDUIT latencies to UW-Madison

>>>>>> >> idd.aos.wisc.edu starting the last day or two.

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Pete,

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> We've been struggling with latencies for months to the point where I've
>>>>>> >> been feeding gfs 0p25 from NCEP and the rest from Unidata... that is, up
>>>>>> >> until Feb 10th. The afternoon of the 10th, our latencies to NCEP dropped to
>>>>>> >> what I consider "normal", an average maximum latency of about 30 seconds.
>>>>>> >> Our networking folks and NCEP have been trying to identify what this
>>>>>> >> problem was, but as far as I know, no problem has been identified or action
>>>>>> >> taken. So, it appears it's all buried in the mysteries of the internet.
>>>>>> >> I've switched data collection back to NCEP at this point, but I'm on the
>>>>>> >> edge of my seat waiting to see if it reverts back to the old behavior...

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Art

>>>>>> >>

>>>>>> >>

>>>>>> >> ------------------------------

>>>>>> >>

>>>>>> >> *From: *"Pete Pokrandt" <poker@xxxxxxxxxxxx>

>>>>>> >> *To: *"Carissa Klemmer - NOAA Federal" <carissa.l.klemmer@xxxxxxxx>,
>>>>>> >> "Arthur A Person" <aap1@xxxxxxx>, "_NCEP.List.pmb-dataflow" <
>>>>>> >> ncep.list.pmb-dataflow@xxxxxxxx>
>>>>>> >> *Cc: *"support-conduit@xxxxxxxxxxxxxxxx" <conduit@xxxxxxxxxxxxxxxx>,
>>>>>> >> "Michael Schmidt" <mschmidt@xxxxxxxx>, "Bentley, Alicia M" <
>>>>>> >> ambentley@xxxxxxxxxx>, "Daes Support" <daessupport@xxxxxxxxxx>

>>>>>> >> *Sent: *Friday, February 19, 2016 12:20:20 PM

>>>>>> >> *Subject: *Large CONDUIT latencies to UW-Madison idd.aos.wisc.edu

>>>>>> >> starting the last day or two.

>>>>>> >>

>>>>>> >> All,

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Not sure if this is on my end or somewhere upstream, but the last several
>>>>>> >> runs my CONDUIT latencies have been getting huge to the point where we are
>>>>>> >> losing data.

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> I did stop my ldm the other day to add in an alternate feed for Gilbert at
>>>>>> >> allisonhous.com, not sure if that pushed me over a bandwidth limit, or by
>>>>>> >> reconnecting we got hooked up to a different remote ldm, or taking a
>>>>>> >> different path, that shot the latencies up.

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Seems to be really only CONDUIT, none of our other feeds show this kind of
>>>>>> >> latency.

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Still looking into things locally, but wanted to make people aware. I just
>>>>>> >> rebooted idd.aos.wisc.edu, will see if that helps at all.

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Here's an ldmping and traceroute from idd.aos.wisc.edu to

>>>>>> >> conduit.ncep.noaa.gov.

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> [ldm@idd ~]$ ldmping conduit.ncep.noaa.gov

>>>>>> >>

>>>>>> >> Feb 19 17:16:08 INFO: State Elapsed Port Remote_Host rpc_stat

>>>>>> >> Feb 19 17:16:08 INFO: Resolving conduit.ncep.noaa.gov to 140.90.101.42 took 0.00486 seconds

>>>>>> >> Feb 19 17:16:08 INFO: RESPONDING 0.115499 388 conduit.ncep.noaa.gov

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> traceroute to conduit.ncep.noaa.gov (140.90.101.42), 30 hops max, 60 byte packets

>>>>>> >> 1 r-cssc-b280c-1-core-vlan-510-primary.net.wisc.edu (144.92.130.3) 0.760 ms 0.954 ms 0.991 ms

>>>>>> >> 2 internet2-ord-600w-100G.net.wisc.edu (144.92.254.229) 18.119 ms 18.123 ms 18.107 ms

>>>>>> >> 3 et-10-0-0.107.rtr.clev.net.internet2.edu (198.71.45.9) 27.836 ms 27.852 ms 27.838 ms

>>>>>> >> 4 et-11-3-0-1276.clpk-core.maxgigapop.net (206.196.177.4) 37.363 ms 37.363 ms 37.345 ms

>>>>>> >> 5 noaa-i2.demarc.maxgigapop.net (206.196.177.118) 38.051 ms 38.254 ms 38.401 ms

>>>>>> >> 6 140.90.111.36 (140.90.111.36) 118.042 ms 118.412 ms 118.529 ms

>>>>>> >> 7 140.90.76.69 (140.90.76.69) 41.764 ms 40.343 ms 40.500 ms

>>>>>> >>

>>>>>> >> 8 * * *

>>>>>> >>

>>>>>> >> 9 * * *

>>>>>> >>

>>>>>> >> 10 * * *

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Similarly to ncepldm

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> [ldm@idd ~]$ ldmping ncepldm4.woc.noaa.gov

>>>>>> >>

>>>>>> >> Feb 19 17:18:40 INFO: State Elapsed Port Remote_Host rpc_stat

>>>>>> >> Feb 19 17:18:40 INFO: Resolving ncepldm4.woc.noaa.gov to 140.172.17.205 took 0.001599 seconds

>>>>>> >> Feb 19 17:18:40 INFO: RESPONDING 0.088901 388 ncepldm4.woc.noaa.gov

>>>>>> >>

>>>>>> >> ^C

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> [ldm@idd ~]$ traceroute ncepldm4.woc.noaa.gov

>>>>>> >>

>>>>>> >> traceroute to ncepldm4.woc.noaa.gov (140.172.17.205), 30 hops max, 60 byte packets

>>>>>> >> 1 r-cssc-b280c-1-core-vlan-510-primary.net.wisc.edu (144.92.130.3) 0.730 ms 0.831 ms 0.876 ms

>>>>>> >> 2 internet2-ord-600w-100G.net.wisc.edu (144.92.254.229) 18.092 ms 18.092 ms 18.080 ms

>>>>>> >> 3 ae0.3454.core-l3.frgp.net (192.43.217.223) 40.196 ms 40.226 ms 40.256 ms

>>>>>> >> 4 noaa-i2.frgp.net (128.117.243.11) 40.970 ms 41.012 ms 40.996 ms

>>>>>> >> 5 2001-mlx8-eth-1-2.boulder.noaa.gov (140.172.2.18) 42.780 ms 42.778 ms 42.764 ms

>>>>>> >> 6 mdf-rtr-6.boulder.noaa.gov (140.172.6.251) 40.869 ms 40.922 ms 40.946 ms

>>>>>> >>

>>>>>> >> 7 * * *

>>>>>> >>

>>>>>> >> 8 * * *

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> Pete

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> --

>>>>>> >> Pete Pokrandt - Systems Programmer

>>>>>> >> UW-Madison Dept of Atmospheric and Oceanic Sciences

>>>>>> >> 608-262-3086 - poker@xxxxxxxxxxxx

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >>

>>>>>> >> --

>>>>>> >>

>>>>>> >> Arthur A. Person

>>>>>> >> Research Assistant, System Administrator

>>>>>> >> Penn State Department of Meteorology

>>>>>> >> email: aap1@xxxxxxx, phone: 814-863-1563

>>>>>> >>


>>>>>> >>

>>>>>> >

>>>>>> >

>>>>>> >

>>>>>> > --

>>>>>> > Gerry Creager

>>>>>> > NSSL/CIMMS

>>>>>> > 405.325.6371

>>>>>> > ++++++++++++++++++++++

>>>>>> > “Big whorls have little whorls,

>>>>>> > That feed on their velocity;

>>>>>> > And little whorls have lesser whorls,

>>>>>> > And so on to viscosity.”

>>>>>> > Lewis Fry Richardson (1881-1953)

>>>>>> >

>>>>>>

>>>>>>


>>

>>

>> _______________________________________________

>> Ncep.list.pmb-dataflow mailing list

>> Ncep.list.pmb-dataflow@xxxxxxxxxxxxxxxxxxxx

>> https://www.lstsrv.ncep.noaa.gov/mailman/listinfo/ncep.list.pmb-dataflow

>>

>>

>