|

Derek, (cc Unidata CONDUIT support)

It looks like the 10-way split is definitely helping.

I'm not sure that whatever the original issue was has been resolved, as we still get longer lags during the 12 UTC run cycle than we used to, but the 10-way split seems to get around that so our lags remain low enough.

Unidata, and other sites feeding from conduit.ncep.ncep.gov may wish to consider using a 10-way split if they aren't already, if they are dealing with larger lags.

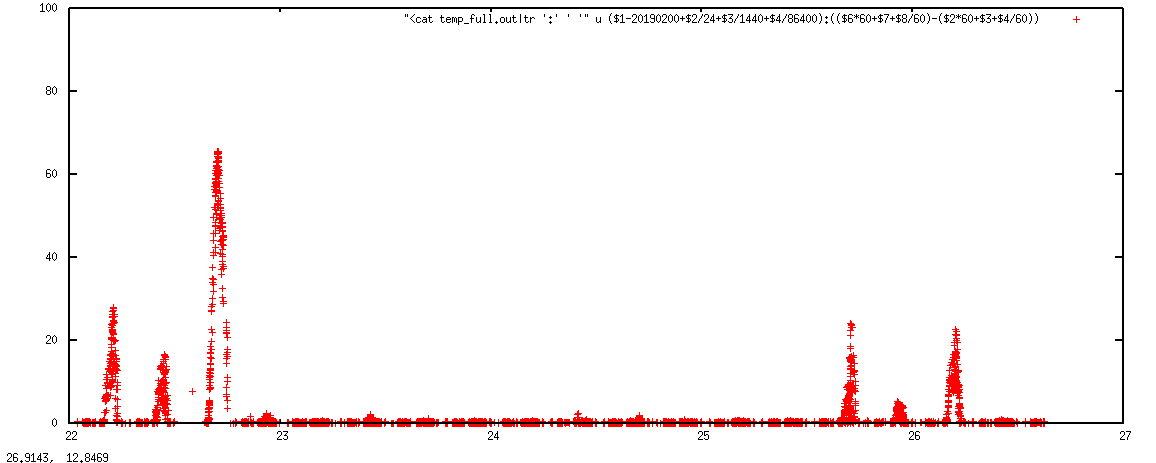

On the current rtstats graph, the large peaks just before 25/18, 26/00, and 26/06 occurred when I was using a 5-way split feed for CONDUIT. prior to the peak at 25/18, and after the peak at 26/06, I was using a 10-way split feed.

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

From: Derek VanPelt - NOAA Affiliate <address@hidden>

Sent: Tuesday, February 26, 2019 9:29 AM

To: Pete Pokrandt

Cc: _NCEP.List.pmb-dataflow

Subject: Re: [Ncep.list.pmb-dataflow] Fwd: [conduit] Large lags on CONDUIT feed - started a week or so ago

Hi Pete,

Taking a look at the data overnight, it does look changing from 5 - 10 requests is making noticeable and repeatable differences

Please let me know when you have switched back to 10 separate requests again. (Have you already?).

I'd like to get a solid time-line noting your latencies and number of requests.

Hopefully you have resolved the current issues, and if we can verify that - I'd like to be able to use your example to help other customers that are seeing similar periodic latencies with LDM data

Thank you,

Derek

yrange: Latency in minutes (0-100)

xrange: Date Feb 22 - Feb 27)

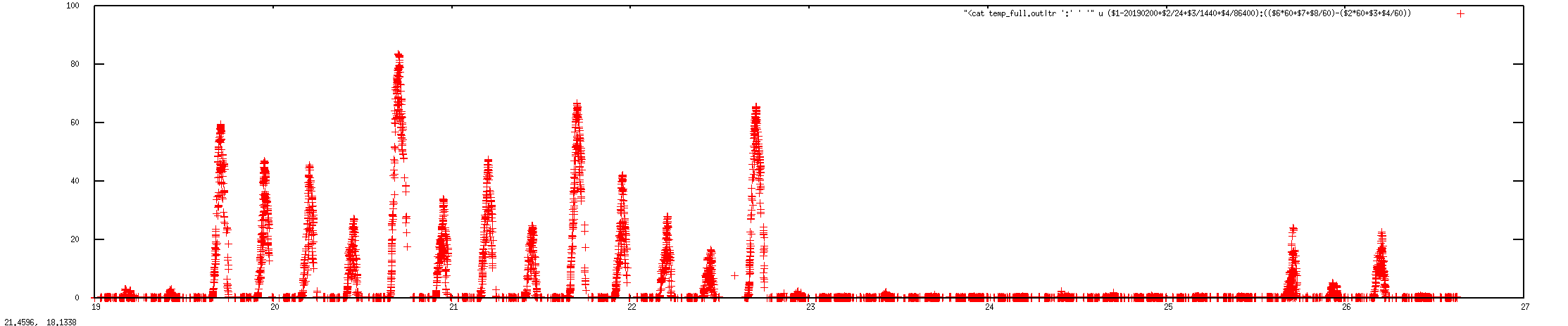

yrange in minutes (0-100)

xrange (Feb 19 - Feb 27)

Derek,

Small sample size but... My latencies were already increasing somewhat during the 12 UTC suite of runs (around 11 AM CST/17 UTC when I last emailed you) - they were around 500 seconds when I switched back from the 10 way split back to the 5 way split. The latency

increase continued until it was over 1500 seconds, so I switched back to the 10 way. The latencies got smaller, but it was also near the end of the 12 UTC suite, so I don't know if it was the 10 way split, or just the natural end of model suite/data that

brought them back down.

My plan currently is -

I'm going to change back to the 5-way split for the 18 UTC model suite, starting now and leave it that way for the entire suite to see what happens. Then I'll switch back to the 10 way for the 00 UTC set and leave it like that until tomorrow morning, and see

if there is any noticeable difference between the 5 way split 18 UTC and the 10 way split beyond.

Tomorrow, I'll let you know what I find from that test.

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

From: Derek VanPelt - NOAA Affiliate <address@hidden>

Sent: Monday, February 25, 2019 11:05 AM

To: Pete Pokrandt

Subject: Re: [Ncep.list.pmb-dataflow] Fwd: [conduit] Large lags on CONDUIT feed - started a week or so ago

My quick analysis showed your latencies to be very minimal since Friday afternoon, but I don’t know if that was your change or ours. I’ll be very curious as to whether your split of the requests was the difference.

Thank you,

Derek

On Mon, Feb 25, 2019 at 12:00 PM Pete Pokrandt < address@hidden> wrote:

Derek,

I have seen lower latencies since Friday - although I also made a change on my end that could have contributed to them. I changed my request to be a 10 way split feed instead of 5 way. I was curious if something had happened on your end as well.

I'll switch it back to a 5-way and see if the latencies rise up again, and let you know.

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

From: Derek VanPelt - NOAA Affiliate <address@hidden>

Sent: Monday, February 25, 2019 10:00 AM

To: Pete Pokrandt

Cc: _NCEP.List.pmb-dataflow

Subject: Re: [Ncep.list.pmb-dataflow] Fwd: [conduit] Large lags on CONDUIT feed - started a week or so ago

Hi Pete,

After our discussions last week, we had a larger disturbance on Friday that caused NCO to reboot the Conduit box. Have you seen any changes in latencies since ~16Z on Friday?

I will be running our analysis later today, but am curious if you have seen changes.

Thank you,

Derek

Interesting. I do notice that the 12 UTC run suite is the worst. I ran a traceroute from

idd-agg.aos.wisc.edu to

conduit.ncep.noaa.gov - though not during the congested data - and got this - up to the point where my pings don't get past the NCEP firewall:

[ldm@idd ~]$ traceroute

conduit.ncep.noaa.gov

2 128.104.4.129 (128.104.4.129) 1.572 ms 1.579 ms 1.912 ms

5 144.92.254.229 (144.92.254.229) 6.345 ms 6.357 ms 6.338 ms

10 140.208.63.30 (140.208.63.30) 25.097 ms 25.206 ms 25.194 ms

11 140.90.76.65 (140.90.76.65) 25.478 ms 25.588 ms 25.825 ms

12 * * *

13 * * *

14 * * *

I'll run another one when the latencies get large again and see if anything changes.

The lags are still there. I may contact the UIUC admin and see about redundantly feeding from them until this gets resolved. Or maybe I can try to stop and restart the LDM and see if it connects through a different route?

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

From: Derek VanPelt - NOAA Affiliate <address@hidden>

Sent: Thursday, February 21, 2019 4:30 PM

To: Pete Pokrandt

Cc: _NCEP.List.pmb-dataflow

Subject: Re: [Ncep.list.pmb-dataflow] Fwd: [conduit] Large lags on CONDUIT feed - started a week or so ago

HI Pete,

One more data point. Looking up some of the files that were pulled very late by idd-agg, I found that same file was pulled in a timely fashion by uiuc, and inconsistent behaviour from unidata.

-bash-4.1$ grep "geavg.t12z.pgrb2af144" ldmd.log.1| grep "100 CON"| awk '{print $1, $2, $3, $5, $9 }'| column -t

Feb 20 17:02:20 xxx.uiuc.edu(feed)[7342] 20190220165633.100

Feb 20 17:42:53 xxx.unidata.ucar.edu(feed)[25947] 20190220165633.100

Feb 20 18:19:26 xxx.aos.wisc.edu(feed)[5679] 20190220165633.100

-bash-4.1$ grep "data2/ndfd/YRUZ98_KWBN_201716.grib2" ldmd.log.1| grep "100 CON"| awk '{print $1, $2, $3, $5, $9 }'| column -t

Feb 20 17:27:25 xxxx.atmos.uiuc.edu(feed)[7415] 20190220172033.100

Feb 20 18:16:38 xxx.unidata.ucar.edu(feed)[25967] 20190220172033.100

Feb 20 18:20:30 xxx.aos.wisc.edu(feed)[5677] 20190220172033.100

-bash-4.1$ grep "pgrb2a/gep06.t00z.pgrb2af342" ldmd.log.1| grep "100 CON"|grep VREL|awk '{print $1, $2, $3, $5, $9 }'

Feb 20 05:24:42 xxx.atmos.uiuc.edu(feed)[7345] 20190220052430.100

Feb 20 05:24:54 xxx.unidata.ucar.edu(feed)[25960] 20190220052430.100

Feb 20 05:52:09 xxx.aos.wisc.edu(feed)[5676] 20190220052430.100

I need to step back and figure out what this implies, but it seems likely that we are going to have to do trace routes, from each user, and start looking at this this as possibly a network issue.

Derek

PS We have seem similar issues where users getting the same LDM data get widely divergent request/arrival times on other servers.

Derek,

Thanks, that matches what I have seen. I did notice the latencies start to go up at times - I think I noticed over last weekend - but they were not so large that we were losing data, so I didn't bring it up at that point. Yesterday, when the latencies got large

enough that we were losing data, I couldn't ignore it anymore.

I can say that prior to this latest episode of large latency spikes, the latencies were consistently good, no more than 300 seconds or so, and fairly flat - similar to the periods in between the spikes - generally around 30-60 seconds or so.

Thanks again for investigating.

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

From: Derek VanPelt - NOAA Affiliate <address@hidden>

Sent: Thursday, February 21, 2019 3:32 PM

To: Pete Pokrandt

Cc: _NCEP.List.pmb-dataflow

Subject: Re: [Ncep.list.pmb-dataflow] Fwd: [conduit] Large lags on CONDUIT feed - started a week or so ago

Hi Pete,

I went as deep into our logs as I could in order to try to get a longer pattern.

What I found was that there were latency spikes of up to 40 minutes going back to the 14th, and over the last two days the values got much higher. I am going be passing this on to our systems team to see if there is anything about the vm this runs from

that may need to be examined.

If we find something material we will let you know.

Thank you,

Derek

Derek,

I don't know if I have access to the data that goes into making those plots.. I'll ask if I can get it. However, based on looking at some file completion times for the 0p25 GFS and 1 degree GEFS, it looks like the latency problem began around February 13.

As a proxy, here are the times that the 12 UTC GFS finished to 384h. It was consistently 10:52-10:54 until the 12 UTC run on February 13, when it was significantly later. Also, you can see based on the much smaller file sizes that yesterdays' (Feb 20) and

today's (Feb 21) did not come in completely.

[ldm@thredds Global_0p25deg]$ ls -l *_1200.grib2

-rw-r--r--. 1 ldm ldm 19409515250 Feb 7 10:53 GFS_Global_0p25deg_20190207_1200.grib2

-rw-r--r--. 1 ldm ldm 19591317136 Feb 8 10:52 GFS_Global_0p25deg_20190208_1200.grib2

-rw-r--r--. 1 ldm ldm 19639007022 Feb 9 10:52 GFS_Global_0p25deg_20190209_1200.grib2

-rw-r--r--. 1 ldm ldm 19827927236 Feb 10 10:52 GFS_Global_0p25deg_20190210_1200.grib2

-rw-r--r--. 1 ldm ldm 19777968918 Feb 11 10:50 GFS_Global_0p25deg_20190211_1200.grib2

-rw-r--r--. 1 ldm ldm 19452902032 Feb 12 10:54 GFS_Global_0p25deg_20190212_1200.grib2

-rw-r--r--. 1 ldm ldm 19446859186 Feb 13 11:39 GFS_Global_0p25deg_20190213_1200.grib2

-rw-r--r--. 1 ldm ldm 18968196003 Feb 14 11:30 GFS_Global_0p25deg_20190214_1200.grib2

-rw-r--r--. 1 ldm ldm 18910899941 Feb 15 11:31 GFS_Global_0p25deg_20190215_1200.grib2

-rw-r--r--. 1 ldm ldm 19131890024 Feb 16 10:55 GFS_Global_0p25deg_20190216_1200.grib2

-rw-r--r--. 1 ldm ldm 18975852593 Feb 17 11:18 GFS_Global_0p25deg_20190217_1200.grib2

-rw-r--r--. 1 ldm ldm 19016491273 Feb 18 11:05 GFS_Global_0p25deg_20190218_1200.grib2

-rw-r--r--. 1 ldm ldm 19060104520 Feb 19 11:49 GFS_Global_0p25deg_20190219_1200.grib2

-rw-r--r--. 1 ldm ldm 12913559580 Feb 20 11:28 GFS_Global_0p25deg_20190220_1200.grib2

-rw-r--r--. 1 ldm ldm 17933235888 Feb 21 11:45 GFS_Global_0p25deg_20190221_1200.grib2

Here's the same information for the 1 degree GEFS full forecast. Same story - they were consistently done by 11:29-11:32 until the 12 UTC Feb 13 run where it started finishing much later.

[ldm@thredds members]$ ls -l *_1200.grib2

-rw-r--r--. 1 ldm ldm 5764678691 Feb 7 11:32 GEFS_Global_1p0deg_Ensemble_20190207_1200.grib2

-rw-r--r--. 1 ldm ldm 5766998913 Feb 8 11:32 GEFS_Global_1p0deg_Ensemble_20190208_1200.grib2

-rw-r--r--. 1 ldm ldm 5776900608 Feb 9 11:31 GEFS_Global_1p0deg_Ensemble_20190209_1200.grib2

-rw-r--r--. 1 ldm ldm 5792085497 Feb 10 11:32 GEFS_Global_1p0deg_Ensemble_20190210_1200.grib2

-rw-r--r--. 1 ldm ldm 5776914560 Feb 11 11:29 GEFS_Global_1p0deg_Ensemble_20190211_1200.grib2

-rw-r--r--. 1 ldm ldm 5760837077 Feb 12 11:31 GEFS_Global_1p0deg_Ensemble_20190212_1200.grib2

-rw-r--r--. 1 ldm ldm 5790736476 Feb 13 11:53 GEFS_Global_1p0deg_Ensemble_20190213_1200.grib2

-rw-r--r--. 1 ldm ldm 5786379027 Feb 14 11:49 GEFS_Global_1p0deg_Ensemble_20190214_1200.grib2

-rw-r--r--. 1 ldm ldm 5788285463 Feb 15 11:49 GEFS_Global_1p0deg_Ensemble_20190215_1200.grib2

-rw-r--r--. 1 ldm ldm 5782621650 Feb 16 11:29 GEFS_Global_1p0deg_Ensemble_20190216_1200.grib2

-rw-r--r--. 1 ldm ldm 5773223312 Feb 17 11:36 GEFS_Global_1p0deg_Ensemble_20190217_1200.grib2

-rw-r--r--. 1 ldm ldm 5780145457 Feb 18 11:30 GEFS_Global_1p0deg_Ensemble_20190218_1200.grib2

-rw-r--r--. 1 ldm ldm 5775267656 Feb 19 12:13 GEFS_Global_1p0deg_Ensemble_20190219_1200.grib2

-rw-r--r--. 1 ldm ldm 1316273147 Feb 20 12:28 GEFS_Global_1p0deg_Ensemble_20190220_1200.grib2

-rw-r--r--. 1 ldm ldm 4143987046 Feb 21 12:16 GEFS_Global_1p0deg_Ensemble_20190221_1200.grib2

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

From: Derek VanPelt - NOAA Affiliate <address@hidden>

Sent: Thursday, February 21, 2019 1:52 PM

To: Pete Pokrandt

Subject: Re: [Ncep.list.pmb-dataflow] Fwd: [conduit] Large lags on CONDUIT feed - started a week or so ago

Hi Pete,

Can you identify exactly which day you started seeing latency? A lot of additional load was put into College Park a week plus ago, and if that was when the latency started showing up that would give us a pretty solid idea as to what is going on now.

The graphs you shared go back to the 19th, but if the latency started earlier that changes our analysis greatly.

Thank you,

Derek

PS I can see your latency in our send logs -- but they only go back about 5 days, which may not be enough.

Derek,

Thanks for looking into the large lags. It has gotten to the point where our lags exceed 3600 seconds, and we start losing data.

I'm coming in from 144.92.131.136. My request statements look like:

I can try with a single feed request too, if that might make any difference, or split it more than it is.

Might want to check with Tom Yoksas (cc'd) with Unidata to find out what IP they are feeding from. They are experiencing large lags as well.

Thanks,

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

From: Derek VanPelt - NOAA Affiliate <address@hidden>

Sent: Thursday, February 21, 2019 12:25 PM

To: Pete Pokrandt

Cc: _NCEP.List.pmb-dataflow

Subject: Re: [Ncep.list.pmb-dataflow] Fwd: [conduit] Large lags on CONDUIT feed - started a week or so ago

HI Pete,

I am looking into the latencies you are seeing. Can you provide us with the ip you are coming in from so I can start tracking this down in our CONDUIT logs?

I am also investigating the health of the vms we are using.

Thank you,

Derek

Hi Pete,

We'll take a look and see if we can figure out what might be going on. We haven't done anything to try and address this yet, but based on your analysis I'm suspicious that it might be tied to a resource constraint on the VM or the blade it resides on.

Thanks,

Holly Uhlenhake

Acting Dataflow Team Lead

On Thu, Feb 21, 2019 at 11:32 AM Pete Pokrandt < address@hidden> wrote:

Just FYI, data is flowing, but the large lags continue.

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

Data is flowing again - picked up somewhere in the GEFS. Maybe CONDUIT server was restarted, or ldm on it? Lags are large (3000s+) but dropping slowly

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

Just a quick follow-up - we started falling far enough behind (3600+ sec) that we are losing data. We got short files starting at 174h into the GFS run, and only got (incomplete) data through 207h.

We have now not received any data on CONDUIT since 11:27 AM CST (1727 UTC) today (Wed Feb 20)

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

Carissa,

We have been feeding CONDUIT using a 5 way split feed direct from

conduit.ncep.noaa.gov, and it had been really good for some time, lags 30-60 seconds or less.

However, the past week or so, we've been seeing some very large lags during each 6 hour model suite - Unidata is also seeing these - they are also feeding direct from

conduit.ncep.noaa.gov.

Any idea what's going on, or how we can find out?

Thanks!

Pete

--

Pete Pokrandt - Systems Programmer

UW-Madison Dept of Atmospheric and Oceanic Sciences

608-262-3086 - address@hidden

_______________________________________________

NOTE: All exchanges posted to Unidata maintained email lists are

recorded in the Unidata inquiry tracking system and made publicly

available through the web. Users who post to any of the lists we

maintain are reminded to remove any personal information that they

do not want to be made public.

conduit mailing list

address@hidden

For list information or to unsubscribe, visit:

http://www.unidata.ucar.edu/mailing_lists/

_______________________________________________

Ncep.list.pmb-dataflow mailing list

address@hidden

https://www.lstsrv.ncep.noaa.gov/mailman/listinfo/ncep.list.pmb-dataflow

--

Derek Van Pelt

DataFlow Analyst

NOAA/NCEP/NCO

--

Derek Van Pelt

DataFlow Analyst

NOAA/NCEP/NCO

--

Derek Van Pelt

DataFlow Analyst

NOAA/NCEP/NCO

--

Derek Van Pelt

DataFlow Analyst

NOAA/NCEP/NCO

--

Derek Van Pelt

DataFlow Analyst

NOAA/NCEP/NCO

--

Misspelled straight from Derek's phone.

--

Derek Van Pelt

DataFlow Analyst

NOAA/NCEP/NCO

|